There’s been some work in recent years on the differences between implicit and explicit motor learning – that is, the kind of learning the brain does by itself, relying on cues from the environment, vs. the kind that uses a well-defined strategy to perform a task. Take learning to carry a full glass of water without spilling it: you can just do it, getting it wrong a lot until you implicitly work out how, or you can explicitly tell yourself, “Ok, I’m going to try to keep the water as level as possible.” In a fun little study on this, Mazzoni and Krakauer (2006) showed that giving participants an explicit strategy in a visuomotor rotation task (reaching to a target while the visual feedback of the reach is rotated) actually hurt their performance. Participants started off performing the task well using the explicit strategy, which was something like ‘aim for the target to the left of the one you need to hit’. However, as the task went on, the implicit system doggedly learned the rotation anyway, and that learning conflicted with the explicit strategy, so the participants were making more errors at the end than at the beginning.

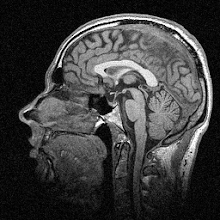

The paper I’m looking at today follows up on this result. Implicit error-based learning is thought to be the province of the cerebellum, the primitive, walnut-shaped bit at the back of the brain. The researchers hit upon the idea that if the cerebellum is important for implicit learning, then perhaps patients with cerebellar impairments would actually find it easier to perform the task relative to healthy control participants. To test this, they told both sets of participants to use an explicit strategy in a visuomotor rotation task, just like in the previous study, and measured their ‘drift’ from the ideal reaching movement.
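To make the ‘drift’ idea concrete, here is a toy sketch (my own illustration, not the model used in the paper). It assumes a rotation of 45°, that the explicit strategy is to aim exactly 45° the other way, and, following the interpretation above, that the implicit system corrects the gap between where the cursor went and where the participant *aimed*, not where the target was. The `learning_rate` values are hypothetical; a smaller one stands in for impaired cerebellar error-based learning.

```python
def simulate_drift(n_trials: int,
                   rotation_deg: float = 45.0,
                   learning_rate: float = 0.1,
                   retention: float = 1.0) -> list[float]:
    """Toy state-space model of implicit drift under an explicit aiming strategy.

    Assumptions (illustrative only): the target sits at 0 degrees, the
    participant aims exactly opposite the rotation, and the implicit state
    is updated from the cursor-vs-aim discrepancy on every trial.
    Returns the target error (cursor angle minus target angle) per trial.
    """
    target = 0.0
    aim = target - rotation_deg   # explicit strategy: aim to cancel the rotation
    implicit = 0.0                # implicit adaptation state, in degrees
    target_errors = []
    for _ in range(n_trials):
        hand = aim + implicit             # the strategy plus whatever has been learned implicitly
        cursor = hand + rotation_deg      # visual feedback is rotated
        target_errors.append(cursor - target)
        # The implicit system treats the aim point as its goal, so it keeps
        # "correcting" even when the cursor is already on the target.
        implicit = retention * implicit - learning_rate * (cursor - aim)
    return target_errors


control = simulate_drift(80, learning_rate=0.1)     # hypothetical intact learner
patient = simulate_drift(80, learning_rate=0.02)    # hypothetical impaired learner
print(f"control final error: {control[-1]:.1f} deg")
print(f"patient final error: {patient[-1]:.1f} deg")
```

On the first trial both start on target, and the error then grows away from zero as implicit learning accumulates; the weaker learner drifts more slowly, which is the qualitative pattern the paper reports for the cerebellar patients.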

Below you can see the results (Figure 2A in the paper):

Target error across movements

Open circles show control participants and filled circles show patients. The black circles at the start show baseline performance: both groups performed well and similarly. Red circles show the first couple of movements after the rotation was applied but before participants were told to use the strategy; you can see that they are reaching completely the wrong way. The blue section shows reaching while using the strategy. Here’s the nice bit: the cerebellar patients are doing better than the controls, as their error stays closer to zero, whereas the controls are steadily drifting away from the intended target. Magenta shows when the participants are asked to stop using the strategy, and the final cyan markers show the ‘washout’ phase, in which both groups return to baseline without an imposed rotation, though the patients get there much more quickly than the controls.

So it looks very much like the cerebellar patients, whose impaired cerebellum limits implicit learning, are able to perform this task better than healthy people. What’s kind of interesting is that other research has shown that cerebellar patients aren’t very good at forming explicit strategies on their own, which is something healthy people do without even thinking about it. The tentative conclusion of the researchers is that the implicit and explicit systems are not completely separate; rather, the implicit system can inform the development of explicit strategies, a process that is impaired if the cerebellum isn’t working properly.

I didn’t like everything in this paper. I was particularly frustrated with the methods section: it describes the kind of screen they used, but it was unclear whether the images were shown to participants on a screen in front of them or on a screen placed over the workspace in a virtual-reality setup. There was also a sentence claiming that the cerebellar patients’ performance was ‘less’ than the controls’, when in fact it was better. Other than these minor niggles, though, it’s a really nice paper showing a very cool effect.

--

Taylor JA, Klemfuss NM, & Ivry RB (2010). An Explicit Strategy Prevails When the Cerebellum Fails to Compute Movement Errors. *Cerebellum*. PMID: 20697860

Images copyright © 2010 Taylor, Klemfuss & Ivry